Neural Memory Architecture

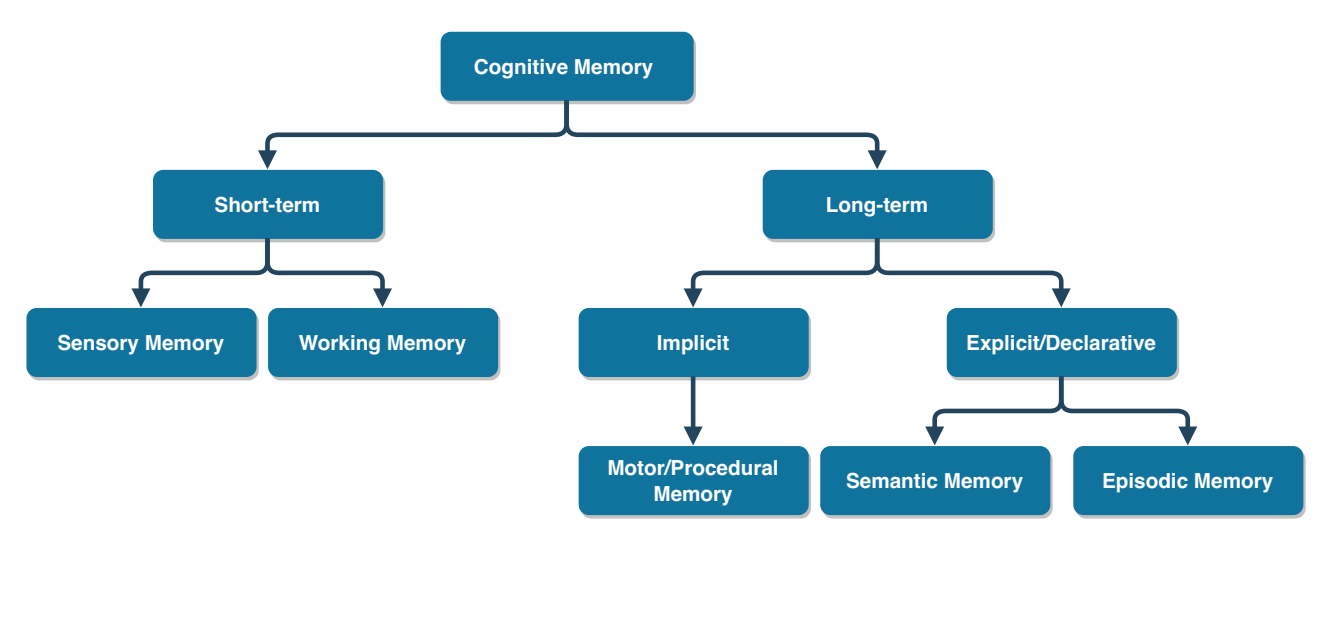

Memory is the core of intelligence. Thanks to memory, humans can effortlessly recognize objects, recall past events, plan for the future, explain their surroundings and reason from facts. From a cognitive perspective, memory can take many forms and serve many functions (see figure below).

To build human-like AI, we should give AI human-like memory. But how is that possible, and is it even necessary? With the rise of deep learning, AI has become more advanced, yet less human-like. By greedily encoding data into deep neural networks, AI excels at pattern recognition and can surpass humans in many areas (playing Go, video games, image recognition, etc.). Memory inevitably plays a role in these deep neural networks, but that role is still unclear. The lack of a concrete and complete memory architecture makes it difficult for modern AI to match human performance in other settings such as lifelong learning, reasoning, few-shot learning and systematic generalization.

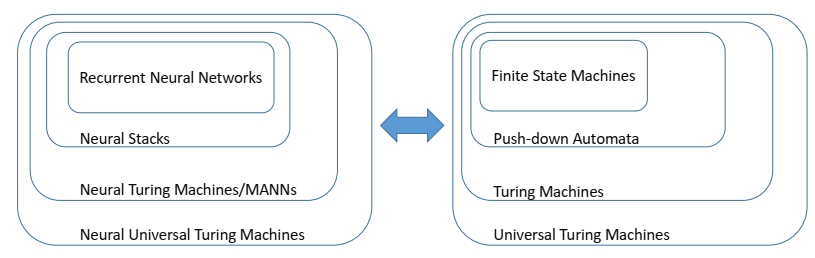

From a computational perspective, neural networks can compute just like computers, and computers also have memory. There is an interesting analogy between theoretical computing models and neural network architectures regarding memory capacity (see figure below).

To achieve universal computation, AI should have memory as well. With computational memory, we can teach AI to invent algorithms, solve multiple tasks with a single architecture, and optimize optimization or simulation processes. These are important problems for science and industry, yet AI's ability to solve them is still immature. Unlike human memory, computational memory is well-defined. However, there is no mechanism that allows AI to learn, evolve and scale with theoretical memory models. “How to efficiently train AI with such memory models” remains an open question.

This project addresses these weaknesses of modern AI by exploring novel memory architectures motivated by cognitive and computational insights. To that end, we investigate various aspects of memory capability such as continuity, hierarchy, imagination, reasoning, algorithmics and memorization.

So far, our work has touched on several of these aspects:

- Multi-memory Architecture
  - Design neural network architectures with multiple external memories and access mechanisms
  - Solve multi-view, multi-modality problems
- Memory for Memorization and Reasoning
  - Build neural memory mechanisms and operators that enable memorization of the long-term past and reasoning capability
  - Applicable to graph learning, question answering and algorithmic reasoning
- Program Memory
  - Design neural networks with the new capability of accessing a memory of programs
  - Well suited to multi-task, continual and conditional computing
- Deep Generative Memory
  - Learning to imagine with long-term memory
  - Potential for video generation
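
To make "external memory and access mechanisms" more concrete, here is a minimal sketch of content-based reading, a technique commonly used in memory-augmented neural networks. It is an illustration only and not necessarily the mechanism used in the works listed above. The names `content_read`, `memory`, `key` and `sharpness` are hypothetical, and the memory is a plain list of vectors rather than a learned tensor.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v + 1e-8)  # small epsilon avoids division by zero


def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def content_read(memory, key, sharpness=10.0):
    """Read from memory by similarity to a query key.

    memory:    list of slots, each a list of floats of the same length
    key:       query vector (assumed to be produced by a controller network)
    sharpness: hypothetical scaling factor; larger values focus the read
               on the best-matching slot
    Returns the read vector and the attention weights over slots.
    """
    weights = softmax([sharpness * cosine(slot, key) for slot in memory])
    dim = len(memory[0])
    # The read vector is the attention-weighted sum of all memory slots.
    read = [sum(w * slot[i] for w, slot in zip(weights, memory)) for i in range(dim)]
    return read, weights


# Three memory slots; the key is closest to the first one.
memory = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
read_vector, weights = content_read(memory, key=[0.9, 0.1, 0.0])
```

Because the read is a soft, weighted sum rather than a hard lookup, every step is differentiable, which is what lets such a memory be trained end to end with the rest of a network. A multi-memory design could, under the same assumptions, call `content_read` on several separate `memory` lists and combine the results.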